Community Update – Overview

- More than 80,000 websites.

- Tens of thousands of AI-generated spam pages.

- And one engine driving it all: OpenAI’s GPT-4o Mini.

What Happened?

Let’s break it down:

- An investigation uncovered a massive spam campaign: a bot posted AI-written promotional messages to more than 80,000 websites, most of them run by small and medium-sized businesses.

- The operation was fully automated, using GPT-4o Mini to churn out messages tailored to each target site. The pitches mimic genuine outreach but offer zero value.

- Some of the spam was even indexed by Google Search, cluttering results, misleading users, and undermining trust in search engines like Google.

These aren't quirky experiments or digital graffiti.

They’re targeted SEO attacks, engineered to exploit monetization loops and algorithmic blind spots.

AI Spambot Floods 80,000+ Websites – A Grim New Milestone

In a discovery that feels like science fiction spilling into real life, security researchers found that an AI model was used to generate spam that a bot then posted across tens of thousands of websites. Over 80,000 sites, many owned by small businesses, were flooded with AI-written marketing spam promoting a shady SEO service. The culprit? A spambot named AkiraBot that abused OpenAI’s GPT-4o Mini, a smaller, lower-cost model in the GPT-4o family, to churn out unique, tailored messages on an unprecedented scale. The news has been reported by outlets such as PCMag and MSN, and it serves as a timely warning to our community: AI isn’t just building the future; it’s also being misused to cause new problems today.

The Bigger Picture

This isn't just about spam.

It’s about a global signal-to-noise crisis.

We’re watching:

- Weaponized AI used to manipulate at scale.

- Ransomware groups abusing zero-day flaws to automate digital heists (Microsoft just exposed this too).

- SEO turned into a battlefield, where attention is hijacked and monetized — not earned.

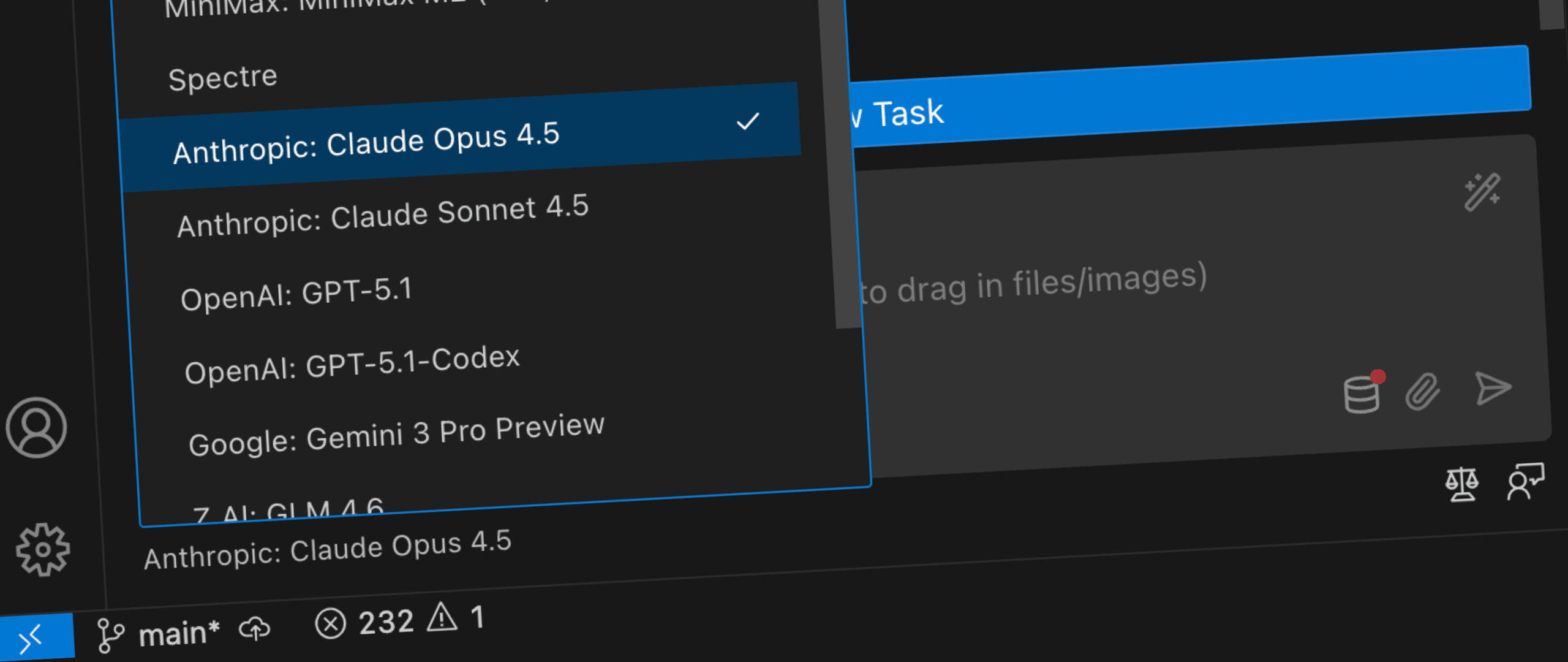

OpenAI’s tools are just the beginning. With Claude, Gemini, and open-source LLMs easily deployable, anyone can automate content pollution — instantly.

And when everyone can do it…

the value of being seen plummets.

A Spam Storm Powered by OpenAI’s GPT-4o Mini

According to a SentinelOne cybersecurity report (covered by PCMag), the AkiraBot spambot successfully spammed at least 80,000 websites around the world. It focused on sites run by small and medium-sized businesses (SMBs), often built on popular platforms like Shopify, GoDaddy, Wix, and Squarespace. If you run a personal blog or an e-commerce site and have seen strange messages offering “cheap SEO magic” in your contact form or comments, those could be the handiwork of AkiraBot.

How did it work? The bot used OpenAI’s chat API (application programming interface) to generate messages customized for each target. Its instruction to GPT-4o Mini was essentially: “You are a helpful assistant that generates marketing messages.” Combined with details about the site being attacked, that simple prompt was enough for the AI to produce spammy but context-aware messages. For example, a construction company’s site would receive a slightly different pitch than a hair salon’s. Because each message was worded differently, it could slip past common spam filters that look for repetitive text.
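To see why per-site tailoring matters, here is a minimal sketch of the kind of naive filter described above: it blocks a message only if an identical one (after lowercasing and collapsing whitespace) has already been seen. The class and function names and the sample messages are hypothetical illustrations, not taken from the SentinelOne report or from any real anti-spam product.

```python
import hashlib
import re


def fingerprint(message: str) -> str:
    """Hash a message after lowercasing it and collapsing runs of whitespace."""
    normalized = re.sub(r"\s+", " ", message.lower()).strip()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


class DuplicateFilter:
    """Hypothetical naive filter: flags a message only if the exact same
    normalized text has been submitted before."""

    def __init__(self) -> None:
        self.seen: set[str] = set()

    def is_spam(self, message: str) -> bool:
        fp = fingerprint(message)
        if fp in self.seen:
            return True  # verbatim repeat: caught
        self.seen.add(fp)
        return False  # first time this exact wording appears: allowed


if __name__ == "__main__":
    f = DuplicateFilter()
    # A copy-paste campaign is caught on its second message.
    print(f.is_spam("Boost your Google ranking today!"))
    print(f.is_spam("boost your  google ranking today!"))
    # Pitches reworded per target (hypothetical examples) both get through.
    print(f.is_spam("Hi construction team, want more site visitors?"))
    print(f.is_spam("Hi salon owners, want more site visitors?"))
```

A verbatim campaign trips the filter on its second message, but the two pitches reworded for a construction company and a hair salon hash to different fingerprints, so both pass. Per-target AI rewording exploits exactly that gap.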

The spam was pushing a bogus SEO service: a scheme that promises website owners higher Google rankings for about $30 per month. Of course, it’s a scam; anyone who takes the bait simply loses money and gets nothing in return. AkiraBot was essentially a high-tech con artist, using AI to impersonate a friendly marketing helper while actually trying to trick people.

Bypassing Defenses – CAPTCHAs, Filters, and More

What makes this case especially alarming is how sophisticated the operation was. The spam didn’t rely on AI-written words alone; the attackers put real effort into evading multiple layers of defense. AkiraBot automated the task of filling out website comment forms and even customer service chat widgets. Websites normally use CAPTCHAs (those “I am not a robot” tests) to stop bots, but this spambot found ways around them, using additional tools to bypass CAPTCHA challenges and hide its tracks.

The result? Over a four-month period, AkiraBot attempted to hit about 420,000 unique domains and successfully posted spam on at least 80,000 of them, roughly one in five. These spam messages even ended up indexed on Google Search, meaning the AI-generated junk became part of the broader internet for a time. It’s like a plague of AI-driven junk mail splattering across the web, not in our email inboxes this time but on the websites we visit.

Growing Threat: From Spam to Fraud and Ransomware

As an online community, we need to grapple with a sobering reality: AI can be misused by bad actors just as easily as it can be used for good. The AkiraBot incident is just one example of a growing trend. In the past, we’ve seen cases of OpenAI’s tools being leveraged for propaganda by state-sponsored groups. Meanwhile, cybercriminals have started developing their own rogue AI chatbots for fraud. For instance, “WormGPT” and “FraudGPT” emerged in 2023 as illicit AI models designed specifically to help scammers write phishing emails and malware. These aren’t hypothetical threats; they’re real tools sold on the dark web to automate cybercrime.

Now imagine AI being used to supercharge ransomware attacks. While AkiraBot’s name coincidentally echoes the “Akira” ransomware gang (there’s no relation), it’s not far-fetched to think that ransomware operators or hackers could also harness AI. They might generate more convincing phishing messages to infect victims, or even automate the process of demanding ransoms and handling negotiations via chatbot. The same technology that writes a polite blog post or helps you draft an email can also write a convincing scam message or a fraudulent demand. That’s the dual-edged sword we’re dealing with.

I’ll be honest: it’s a bit scary to see how quickly this is evolving. As a community member (and a fellow netizen), I find myself both amazed by AI’s capabilities and concerned about its misuse. This spam flood incident is a wake-up call reminding us that we must stay alert.

Can We Protect Against AI-Generated Attacks?

This big question has been echoing in my mind and in discussions online: Is it possible to protect against this new wave of AI-driven spam and scams? The answer is still forming – even the experts are figuring it out. There’s no easy fix yet, but solutions are emerging.

Firstly, companies like OpenAI are learning from these incidents. OpenAI has since disabled the API credentials AkiraBot used and says it is “continually improving [its] systems to detect abuse”. That’s a good start – it shows the AI provider is taking responsibility. Some of you might wonder, “Why did OpenAI’s platform allow this in the first place?” Well, it’s tricky: the spam messages by themselves weren’t obviously malicious like a virus or hack. They were just text that looked like marketing. It’s hard for an AI service to automatically judge if a user’s request is for spam versus a legitimate business outreach, especially when spammers cleverly word things to sound almost normal.

On the user side (that’s us and the website owners), improving spam filters is key. Traditional spam detectors are being updated to recognize AI-generated patterns more effectively. Ironically, we might need to use AI to fight AI: new anti-spam AI systems can analyze content and detect telltale signs of bot-generated messages. But it’s a constant cat-and-mouse game; as filters get smarter, spammers find new tricks. CAPTCHAs will likely get more advanced too, though that’s an arms race of its own, as AkiraBot showed by getting past existing CAPTCHA challenges.

Web administrators are also advised to keep their platforms updated and consider additional verification steps for form submissions (for example, requiring email or phone confirmation before a comment posts, or using modern bot-detection services). Some security experts suggest monitoring traffic for patterns – even if the spam text varies each time, a bot might still leave other fingerprints, like blasting many sites in a short span or coming from unusual IP addresses.
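The traffic-pattern idea can be sketched as a sliding-window counter: even when the wording of every message differs, a bot still tends to submit forms far faster than a person does. This minimal Python sketch assumes a single site tracking submissions per IP address. The class name, thresholds, and IP addresses (taken from the reserved documentation ranges) are hypothetical, and a real deployment would more likely rely on a dedicated bot-detection service.

```python
from collections import defaultdict, deque


class BurstDetector:
    """Hypothetical sketch: flags a client that submits more than
    `max_events` forms within `window_seconds`. Thresholds are
    illustrative assumptions, not recommended values."""

    def __init__(self, max_events: int = 5, window_seconds: float = 60.0) -> None:
        self.max_events = max_events
        self.window = window_seconds
        # One queue of submission timestamps per client IP.
        self.events: dict[str, deque] = defaultdict(deque)

    def record(self, client_ip: str, timestamp: float) -> bool:
        """Record a submission; return True if this client is now over the limit."""
        q = self.events[client_ip]
        q.append(timestamp)
        # Drop submissions that have fallen out of the sliding window.
        while q and timestamp - q[0] > self.window:
            q.popleft()
        return len(q) > self.max_events


if __name__ == "__main__":
    detector = BurstDetector(max_events=3, window_seconds=10)
    # A bot-like burst: four submissions in four seconds from one address.
    for t in (0, 1, 2, 3):
        print("203.0.113.7", t, detector.record("203.0.113.7", t))
    # A human-like pace: two submissions thirty seconds apart.
    for t in (0, 30):
        print("198.51.100.4", t, detector.record("198.51.100.4", t))
```

Timestamps are passed in by the caller (for example, from `time.monotonic()`) so the logic is easy to test. Keying on IP alone is a simplification: a bot that spreads its traffic across many addresses will slip under a per-IP limit, which is why this works best as one signal among several.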

In short, we can build defenses, but there’s no one-button solution right now. It’s going to take a mix of smarter technology, better user awareness, and maybe a dash of policy/regulation in the future to discourage abuse of AI services.

Ethical AI Use and Staying Vigilant

I want to conclude on a hopeful note, even if this topic is a bit heavy. This discovery of AI-generated spam at scale is certainly a warning sign. But it’s also a chance for all of us – tech companies, developers, and everyday internet users – to double down on promoting ethical use of AI. Tools like GPT-4o Mini can be amazing assistants in our daily lives, but only if we use them responsibly. We have to stay vigilant against misuse, whether it’s spam in our site’s comment section or a suspiciously slick email in our inbox.

Every time a new technology emerges, there are growing pains. Right now, we’re witnessing one of them: people trying to weaponize AI for selfish or harmful ends. It’s up to our community to respond by spreading awareness and demanding vigilance from AI providers and platform owners. Keep an eye on your websites and accounts, report unusual spam or scams, and remember that if something online sounds too good to be true (like magically easy SEO results for cheap), it probably is.

Stay safe out there, and let’s continue to champion the ethical use of AI in this ever-evolving digital world. 💡

The future we warned about?

It’s already here.
